|

AIfES 2

2.0.0

|

|

AIfES 2

2.0.0

|

This tutorial should explain how the different components of AIfES 2 work together to train a simple Feed-Forward Neural Network (FNN) or Multi Layer Perceptron (MLP). If you just want to perform an inference with pretrained weights, switch to the inference tutorial.

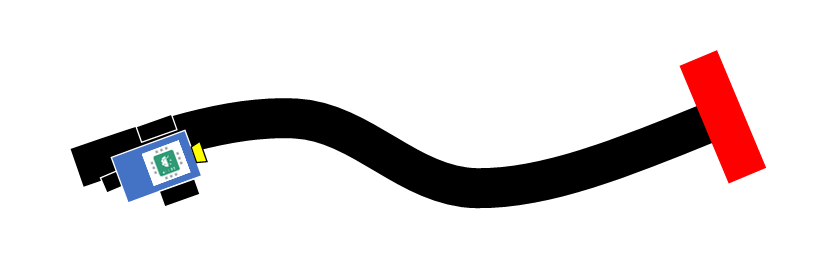

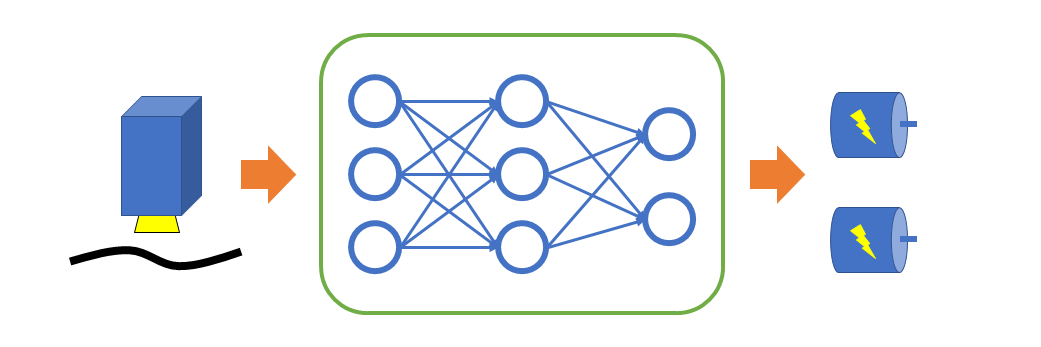

As an example, we take a robot with two powered wheels and a RGB color sensor that should follow a black line on a white paper. To fulfill the task, we map the color sensor values with an FNN directly to the control commands for the two wheel-motors of the robot. The inputs for the FNN are the RGB color values scaled to the interval [0, 1] and the outputs are either "on" (1) or "off" (0) to control the motors.

The following cases should be considered:

The resulting input data of the FNN is then

| R | G | B |

|---|---|---|

| 0 | 0 | 0 |

| 1 | 1 | 1 |

| 1 | 0 | 0 |

and the output should be

| left motor | right motor |

|---|---|

| 1 | 0 |

| 0 | 1 |

| 0 | 0 |

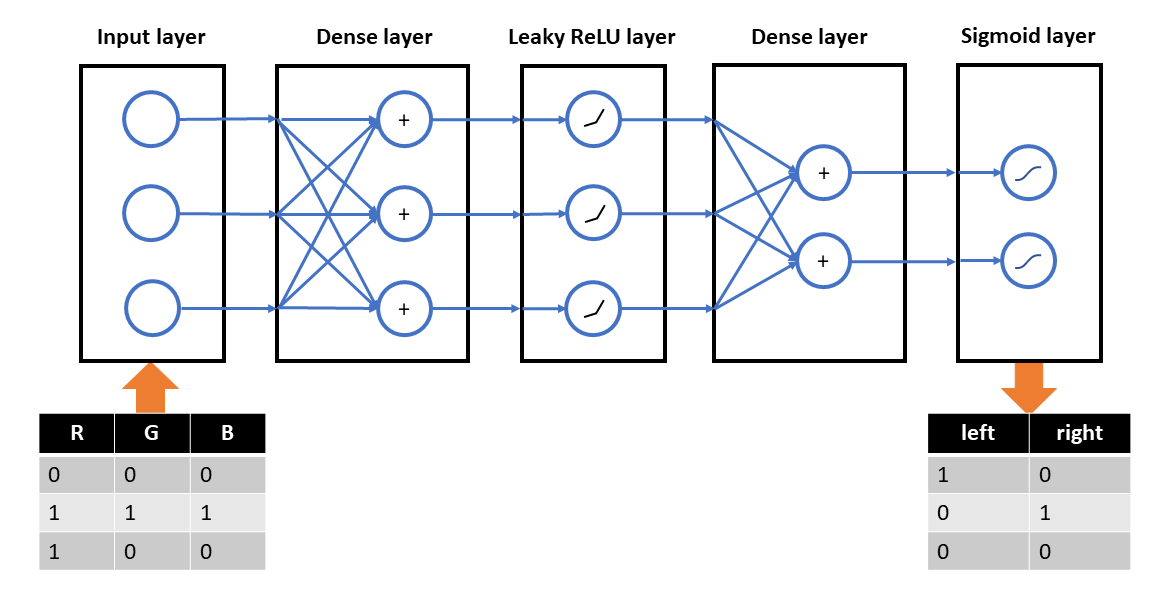

To set up the FNN in AIfES 2, we need to design the structure of the neural network. It needs three inputs for the RGB color values and two outputs for the two motors. Because the task is rather easy, we use just one hidden layer with three neurons.

To create the network in AIfES, it must be divided into logical layers like the fully-connected (dense) layer and the activation functions. We choose a Leaky ReLU activation for the hidden layer and a Sigmoid activation for the output layer.

AIfES provides implementations of the layers for different data types that can be optimized for several hardware platforms. An overview of the layers that are available for training can be seen in the overview section of the main page. In the overview table you can click on the layer in the first column for a description on how the layer works. To see how to create the layer in code, choose one of the implementations for your used data type and click on the link.

In this tutorial we work with the float 32 data type (F32 ) and use the default implementations (without any hardware specific optimizations) of the layers.

Used layer types:

Used implementations:

For every layer we need to create a variable of the specific layer type and configure it for our needs. See the documentation of the data type and hardware specific implementations (for example ailayer_dense_f32_default()) for code examples on how to configure the layers.

Our designed network can be declared with the following code

We use the initializer macros with the "_A" at the end, because we call aialgo_distribute_parameter_memory() later on to set the remaining paramenters (like the weights) automatically.

Afterwards the layers are connected and initialized with the data type and hardware specific implementations

Because AIfES doesn't allocate any memory on its own, you have to set the memory buffers for the trainable parameters like the weights and biases manually. Therefore you can choose fully flexible, where the parameters should be located in memory. To calculate the required amount of memory by the model, the aialgo_sizeof_parameter_memory() function can be used or the size can be calculated manually (See the sizeof_paramem() functions of every layer for the required amount of memory and the set_paramem() function for how to set it to the layer).

With aialgo_distribute_parameter_memory() a memory block of the required size can be distributed and set to the different trainable parameters of the model.

A dynamic allocation of the memory using malloc could look like the following:

You could also pre-define a memory buffer if you know the size in advance, for example

To see the structure of your created model, you can print a model summary to the console

The created FNN can now be trained. AIfES provides the necessary functions to train the model with the backpropagation algorithm.

To calculate the gradients for the backpropagation training algorithm, a loss function must be configured to the model. An overview of available losses can be seen in the overview section of the main page. In the overview table you can click on the loss in the first column for a description on how the loss works. To see how to create the loss in code, choose one of the implementations for your used data type and click on the link.

In this tutorial we work with the float 32 data type (F32 ) and use the default implementation (without any hardware specific optimizations) of the loss. We choose the Cross-Entropy loss because we have sigmoid outputs of the network and binary target data. (Cross-Entropy loss will not work for other output layers than sigmoid or softmax!)

Used loss type:

Used implementation:

The loss can be declared, connected and initialized similar to the layers, by calling

You can print the loss configuration to the console for debugging purposes with

To update the parameters with the calculated gradients, an optimizer must be created. An overview of available optimizers can be seen in the overview section of the main page. In the overview table you can click on the optimizer in the first column for a description on how the loss works. To see how to create the optimizer in code, choose one of the implementations for your used data type and click on the link.

In this tutorial we work with the float 32 data type (F32 ) and use the default implementation (without any hardware specific optimizations) of the optimizer. We choose the Adam optimizer that converges really fast to the optimum with just a small memory and computation overhead. On more limited systems, the SGD optimizer might be a better choice.

Used optimizer type:

Used implementation:

The optimizer can be declared and configured by calling

in C or

in C++ and on Arduino. Afterwards it can be initialized by

You can print the optimizer configuration to the console for debugging purposes with

The trainable parameters like the weights and biases have to be initialized before the training. There are different initialization techniques for different models. For a simple FNN, the recommended initialization of the weights and biases of the dense layers are dependent on the connected activation functions

| Activation function | Weights-init | Bias-init |

|---|---|---|

| None, tanh, sigmoid, softmax | Glorot | Zeros |

| ReLU and variants | He | Zeros |

| SELU | LeCun | Zeros |

In our example, the two dense layers can be initialized manually like described in the recommendation table (uniform distribution versions)

AIfES can also automatically initialize the trainable parameters of the whole model with the default initialization methods configured to the layers.

Because AIfES doesn't allocate any memory on its own, you have to set the memory buffers for the training manually. This memory is required for example for the intermediate results of the layers, for the gradients and as working memory for the optimizer (for momentums). Therefore you can choose fully flexible, where the buffer should be located in memory. To calculate the required amount of memory for the training, the aialgo_sizeof_training_memory() function can be used.

With aialgo_schedule_training_memory() a memory block of the required size can be distributed and scheduled (memory regions might be shared over time) to the model.

A dynamic allocation of the memory using malloc could look like the following:

You could also pre-define a memory buffer if you know the size in advance, for example

Before starting the training, the scheduled memory must be initialized. The initialization sets for example the momentums of the optimizer to zero.

To perform the training, the input data and the target data / labels (and also the test data if available) must be packed in a tensor to be processed by AIfES. A tensor in AIfES is just a N-dimensional array that is used to hold the data values in a structured way. To do this, create 2D tensors of the used data type. The shape describes the size of the dimensions of the tensor. The first dimension (the rows) is the batch dimension, i.e. the dimension of the different training samples. The second dimension equals the inputs / outputs of the neural network.

Because in this case we are just interested in the outputs of the FNN on our training dataset, our test data is just the training data.

Now everything is ready to perform the actual training. AIfES provides the function aialgo_train_model() that performs one epoch of training with mini-batches. One epoch means that the model has seen the whole data ones afterwards. The function aialgo_calc_loss_model_f32() can be used to calculate the loss on the test data between the epochs.

An example training with these functions that trains the model for a pre-defined amount of epochs might look like this:

If you like to have more control over the training process, you can also use the more advanced functions

The call order of the functions in pseudo code could look like this

For a code example, see the source code of the aialgo_train_model() function.

To test the trained model you can perform an inference on the test data and compare the results with the targets. To do this you can call the inference function aialgo_inference_model().

Afterwards you can print the results to the console for further inspection:

If you are satisfied with your trained model, you can delete the working memory created for the training (if it was created dynamically). For further inferences / usage of the model you should assign a new memory block, because the big training memory is not needed anymore. Use aialgo_schedule_inference_memory() to assign a (much smaller) memory block to the model that is made for inference. The required size can be calculated with aialgo_sizeof_inference_memory().

Example:

Or