AIfES 2, version 2.0.0


Welcome to the official AIfES 2 documentation! This guide gives you an overview of the functions of AIfES and how to apply them. The recommendations are based on best practices from various sources and on our own experience with neural networks.

AIfES 2 is a modular toolbox that enables developers to run and train artificial neural networks (ANNs) on resource-constrained edge devices as efficiently as possible and with as little programming effort as possible. Its structure closely follows Python libraries such as Keras and PyTorch to make getting started with the library easier.

The AIfES basic module currently contains the following features:

Layer:


Loss:

| Loss | f32 | q31 | q7 |
|---|---|---|---|
| Mean Squared Error (MSE) | ailoss_mse_f32_default() | ailoss_mse_q31_default() | |
| Crossentropy | ailoss_crossentropy_f32_default(), ailoss_crossentropy_sparse8_f32_default() | | |

Optimizer:

| Optimizer | f32 | q31 | q7 |
|---|---|---|---|
| Stochastic Gradient Descent (SGD) | aiopti_sgd_f32_default() | aiopti_sgd_q31_default() | |
| Adam | aiopti_adam_f32_default() | | |

Algorithmic:

High-level functions to build a simple multilayer perceptron (fully connected neural network) with just a few lines of code.
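The high-level functions assemble such a network for you. For orientation, the following plain C sketch shows only what a forward pass through a tiny 2-3-1 multilayer perceptron (dense, ReLU, dense) computes. All names and the row-major weight layout are hypothetical and are not part of the AIfES API.

```c
#include <stddef.h>

/* Hypothetical fully connected layer: y = W x + b,
 * with W stored row-major as [out][in]. */
void dense_forward_f32(const float *x, size_t in, const float *W,
                       const float *b, float *y, size_t out)
{
    for (size_t o = 0; o < out; o++) {
        float acc = b[o];
        for (size_t i = 0; i < in; i++) {
            acc += W[o * in + i] * x[i];
        }
        y[o] = acc;
    }
}

/* In-place ReLU activation. */
void relu_f32(float *x, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (x[i] < 0.0f) {
            x[i] = 0.0f;
        }
    }
}

/* Forward pass of a 2-3-1 MLP: dense(2->3) -> ReLU -> dense(3->1). */
float mlp_2_3_1_forward(const float x[2],
                        const float W1[6], const float b1[3],
                        const float W2[3], const float b2[1])
{
    float h[3]; /* hidden layer activations */
    float y[1]; /* network output */
    dense_forward_f32(x, 2, W1, b1, h, 3);
    relu_f32(h, 3);
    dense_forward_f32(h, 3, W2, b2, y, 1);
    return y[0];
}
```

Because the hidden buffer `h` is a fixed-size local array, the sketch needs no dynamic memory, which is the usual preference on microcontrollers.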

To help you transfer your neural network models from Python to AIfES and quantize the weights to integer types, we provide the AIfES Python tools.

You can install the tools via pip from our GitHub repository with:

```shell
pip install https://github.com/Fraunhofer-IMS/AIfES_for_Arduino/raw/main/etc/python/aifes_tools.zip
```

See the automatic quantization example to learn how the Python tools can be used to quantize a model to the Q7 integer type.

AIfES was designed as a flexible and extensible toolbox for running and training artificial neural networks on microcontrollers. All layers, losses and optimizers are modular and can be optimized for different data types and hardware platforms.
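One common way to get this kind of modularity in C is to give every layer a pointer to its implementation, so that a data-type or hardware-specific version can be swapped in without changing the code that runs the network. The sketch below illustrates that general idea with a hypothetical `layer_t` interface and in-place layers chained through a `next` pointer; it does not reproduce the actual AIfES backend structures.

```c
#include <stddef.h>

/* Hypothetical generic layer interface: the forward function pointer selects
 * the implementation (e.g. a plain C or a hardware-accelerated version). */
typedef struct layer layer_t;
struct layer {
    void (*forward)(const layer_t *self, float *x, size_t n); /* in-place */
    const layer_t *next; /* next layer in the chain, NULL after the last one */
    float param;         /* example per-layer parameter */
};

/* Example implementation: adds a constant to every element. */
void add_forward(const layer_t *self, float *x, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        x[i] += self->param;
    }
}

/* Example implementation: ReLU, ignores its parameter. */
void relu_forward(const layer_t *self, float *x, size_t n)
{
    (void)self;
    for (size_t i = 0; i < n; i++) {
        x[i] = x[i] > 0.0f ? x[i] : 0.0f;
    }
}

/* Runs the chain without knowing which implementations it contains. */
void run_chain(const layer_t *l, float *x, size_t n)
{
    for (; l != NULL; l = l->next) {
        l->forward(l, x, n);
    }
}
```

Replacing `relu_forward` with an optimized variant only changes the pointer stored in the layer; `run_chain` stays the same.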

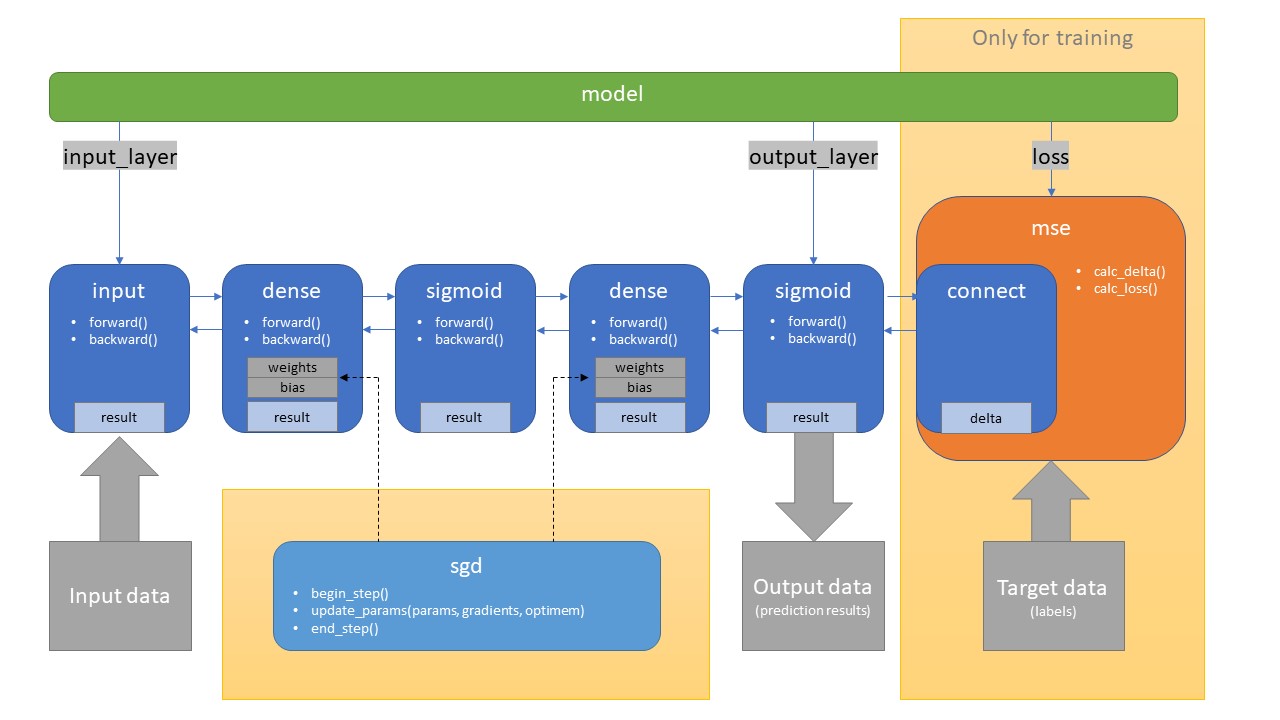

Example structure of a small FNN with one hidden layer in AIfES 2:

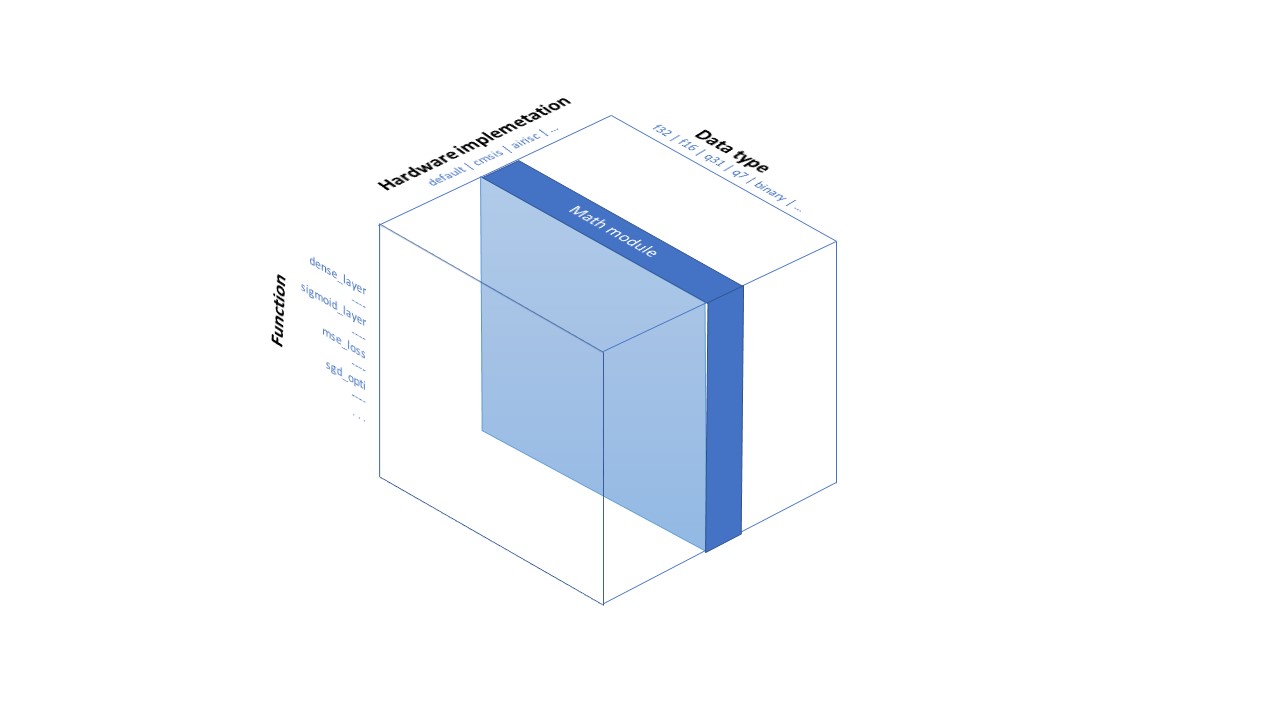

Modular structure of AIfES 2 backend: